The information in this section explains configuring container networks within the Docker default bridge. This is a bridge network named bridge created automatically when you install Docker.

Note: The Docker networks feature allows you to create user-defined networks in addition to the default bridge network.

While Docker is under active development and continues to tweak and improve its network configuration logic, the shell commands in this section are rough equivalents to the steps that Docker takes when configuring networking for each new container.

Review some basics

To communicate using the Internet Protocol (IP), a machine needs access to at least one network interface at which packets can be sent and received, and a routing table that defines the range of IP addresses reachable through that interface. Network interfaces do not have to be physical devices. In fact, the lo loopback interface available on every Linux machine (and inside each Docker container) is entirely virtual – the Linux kernel simply copies loopback packets directly from the sender's memory into the receiver's memory.

Docker uses special virtual interfaces to let containers communicate with the host machine – pairs of virtual interfaces called 'peers' that are linked inside of the host machine's kernel so that packets can travel between them. They are simple to create, as we will see in a moment.

The steps with which Docker configures a container are:

- Create a pair of peer virtual interfaces.

- Give one of them a unique name like veth65f9, keep it inside of the main Docker host, and bind it to docker0 or whatever bridge Docker is supposed to be using.

- Toss the other interface over the wall into the new container (which will already have been provided with an lo interface) and rename it to the much prettier name eth0 since, inside of the container's separate and unique network interface namespace, there are no physical interfaces with which this name could collide.

- Set the interface's MAC address according to the --mac-address parameter or generate a random one.

- Give the container's eth0 a new IP address from within the bridge's range of network addresses. The default route is set to the IP address passed to the Docker daemon using the --default-gateway option if specified, otherwise to the IP address that the Docker host owns on the bridge. The MAC address is generated from the IP address unless otherwise specified. This prevents ARP cache invalidation problems, when a new container comes up with an IP used in the past by another container with another MAC.
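The last step can be illustrated with a small sketch. It assumes a simple "first free host address" policy, which is only an illustration and not necessarily how Docker allocates addresses; the function name `pick_address` and the 172.17.0.0/16 subnet are hypothetical.

```python
import ipaddress


def pick_address(bridge_cidr, in_use, default_gateway=None):
    """Pick a container address and default route on the bridge subnet.

    bridge_cidr is the address the host owns on the bridge, with its prefix
    length. The "first free address" policy is an assumption made for
    illustration.
    """
    bridge = ipaddress.ip_interface(bridge_cidr)
    taken = {ipaddress.ip_address(a) for a in in_use} | {bridge.ip}
    for host in bridge.network.hosts():
        if host not in taken:
            # An explicit gateway wins; otherwise use the host's bridge address.
            gateway = ipaddress.ip_address(default_gateway) if default_gateway else bridge.ip
            return str(host), str(gateway)
    raise RuntimeError("no free address left on %s" % bridge.network)


if __name__ == "__main__":
    print(pick_address("172.17.0.1/16", ["172.17.0.2"]))
```

The second return value mirrors the rule above: the gateway is the explicitly configured one if present, otherwise the address the host owns on the bridge.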

With these steps complete, the container now possesses an eth0 (virtual) network card and will find itself able to communicate with other containers and the rest of the Internet.

You can opt out of the above process for a particular container by giving the --net= option to docker run, which takes these possible values.

- --net=bridge – The default action, that connects the container to the Docker bridge as described above.

- --net=host – Tells Docker to skip placing the container inside of a separate network stack. In essence, this choice tells Docker to not containerize the container's networking! While container processes will still be confined to their own filesystem and process list and resource limits, a quick ip addr command will show you that, network-wise, they live 'outside' in the main Docker host and have full access to its network interfaces. Note that this does not let the container reconfigure the host network stack – that would require --privileged=true – but it does let container processes open low-numbered ports like any other root process. It also allows the container to access local network services like D-bus. This can lead to processes in the container being able to do unexpected things like restart your computer. You should use this option with caution.

- --net=container:NAME_or_ID – Tells Docker to put this container's processes inside of the network stack that has already been created inside of another container. The new container's processes will be confined to their own filesystem and process list and resource limits, but will share the same IP address and port numbers as the first container, and processes on the two containers will be able to connect to each other over the loopback interface.

- --net=none – Tells Docker to put the container inside of its own network stack but not to take any steps to configure its network, leaving you free to build any of the custom configurations explored in the last few sections of this document.

- --net=NETWORK_NAME|NETWORK_ID – Tells Docker to connect the container to a user-defined network.

Manually network

To get an idea of the steps that are necessary if you use --net=none as described in that last bullet point, here are the commands that you would run to reach roughly the same configuration as if you had let Docker do all of the configuration:

At this point your container should be able to perform networking operations as usual.

When you finally exit the shell and Docker cleans up the container, the network namespace is destroyed along with our virtual eth0 – whose destruction in turn destroys interface A out in the Docker host and automatically un-registers it from the docker0 bridge. So everything gets cleaned up without our having to run any extra commands! Well, almost everything:

Also note that while the script above used the modern ip command instead of old deprecated wrappers like ifconfig and route, these older commands would also have worked inside of our container. The ip addr command can be typed as ip a if you are in a hurry.

Finally, note the importance of the ip netns exec command, which let us reach inside and configure a network namespace as root. The same commands would not have worked if run inside of the container, because part of safe containerization is that Docker strips container processes of the right to configure their own networks. Using ip netns exec is what let us finish up the configuration without having to take the dangerous step of running the container itself with --privileged=true.
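The manual steps discussed in this section can be sketched as a command plan. This is a rough illustration rather than Docker's own procedure: the function only builds the iproute2 command lines and does not run them. The peer name `B`, the namespace name `demo` and the addresses are assumptions, and the plan presumes the container's network namespace has already been exposed under /var/run/netns so that ip netns can see it.

```python
def manual_network_plan(netns, address, gateway,
                        bridge="docker0", host_if="A", peer_if="B"):
    """Return the iproute2 commands for wiring a --net=none container by hand.

    Nothing is executed; run the commands as root (for example through
    subprocess.run) once they look right for your setup.
    """
    inside = ["ip", "netns", "exec", netns]
    return [
        # Create the veth pair on the host.
        ["ip", "link", "add", host_if, "type", "veth", "peer", "name", peer_if],
        # Keep one end on the host, attached to the bridge.
        ["ip", "link", "set", host_if, "master", bridge],
        ["ip", "link", "set", host_if, "up"],
        # Move the other end into the container and rename it eth0.
        ["ip", "link", "set", peer_if, "netns", netns],
        inside + ["ip", "link", "set", "dev", peer_if, "name", "eth0"],
        inside + ["ip", "link", "set", "eth0", "up"],
        # Address and default route, configured from outside the container.
        inside + ["ip", "addr", "add", address, "dev", "eth0"],
        inside + ["ip", "route", "add", "default", "via", gateway],
    ]


if __name__ == "__main__":
    for cmd in manual_network_plan("demo", "172.17.0.99/16", "172.17.0.1"):
        print(" ".join(cmd))
```

Every command that touches the container side goes through ip netns exec, which is why the container itself never needs to be privileged.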

Introduction

In part 1 of this blog post we saw how Docker creates a dedicated namespace for the overlay and connects the containers to this namespace. In part 2 we looked in detail at how Docker uses VXLAN to tunnel traffic between the hosts in the overlay. In this third post, we will see how we can create our own overlay with standard Linux commands.

Manual overlay creation

If you have tried the commands from the first two posts, you need to clean up your Docker hosts by removing all our containers and the overlay network:

The first thing we are going to do now is to create a network namespace called 'overns':

Now we are going to create a bridge in this namespace, give it an IP address and bring the interface up:

The next step is to create a VXLAN interface and attach it to the bridge:

The most important command so far is the creation of the VXLAN interface. We configured it to use VXLAN id 42 and to tunnel traffic on the standard VXLAN port. The proxy option allows the vxlan interface to answer ARP queries (we have seen it in part 2). We will discuss the learning option later in this post. Notice that we did not create the VXLAN interface inside the namespace but on the host and then moved it to the namespace. This is necessary so the VXLAN interface can keep a link with our main host interface and send traffic over the network. If we had created the interface inside the namespace (like we did for br0) we would not have been able to send traffic outside the namespace.
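The sequence described so far can be sketched as a command plan. This is a minimal sketch and not a copy of the original commands: the interface name `vxlan1` and the bridge address 192.168.0.1/24 are assumptions, while `overns`, `br0`, VXLAN id 42 and the proxy and learning options come from the text. Port 4789 is the IANA-assigned VXLAN port. The function only builds the commands.

```python
def overlay_plan(netns="overns", bridge="br0", bridge_cidr="192.168.0.1/24",
                 vxlan="vxlan1", vni=42, dstport=4789):
    """Return the iproute2 commands that build the overlay namespace."""
    inside = ["ip", "netns", "exec", netns]
    return [
        ["ip", "netns", "add", netns],
        # The bridge is created directly inside the namespace.
        inside + ["ip", "link", "add", "dev", bridge, "type", "bridge"],
        inside + ["ip", "addr", "add", bridge_cidr, "dev", bridge],
        inside + ["ip", "link", "set", bridge, "up"],
        # The VXLAN interface is created on the host, then moved, so that it
        # keeps its link to the host network.
        ["ip", "link", "add", "dev", vxlan, "type", "vxlan", "id", str(vni),
         "proxy", "learning", "dstport", str(dstport)],
        ["ip", "link", "set", vxlan, "netns", netns],
        inside + ["ip", "link", "set", vxlan, "master", bridge],
        inside + ["ip", "link", "set", vxlan, "up"],
    ]


if __name__ == "__main__":
    for cmd in overlay_plan():
        print(" ".join(cmd))
```

Note that only the VXLAN creation and the move are run outside of ip netns exec, which is the ordering the paragraph above insists on.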

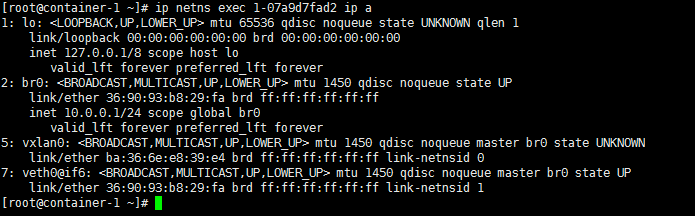

Once we have run these commands on both docker0 and docker1, here is what we have:

Now we will create containers and connect them to our bridge. Let's start with docker0. First, we create a container:

We will need the path of the network namespace for this container. We can find it by inspecting the container.

Our container has no network connectivity because of the --net=none option. We now create a veth and move one of its endpoints (veth1) to our overlay network namespace, attach it to the bridge and bring it up.

The first command uses an MTU of 1450 which is necessary due to the overhead added by the VXLAN header.
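The value 1450 follows from simple arithmetic, sketched below under the assumption of an IPv4 underlay with a 1500-byte MTU and no VLAN tags or IP options. The function name is hypothetical.

```python
# Bytes added in front of the container's IP packet before it leaves the host.
INNER_ETHERNET = 14   # Ethernet header of the encapsulated frame
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20       # an IPv6 underlay would need 40 here


def overlay_mtu(underlay_mtu=1500):
    """Largest IP packet a container can send without fragmenting the tunnel."""
    overhead = INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4
    return underlay_mtu - overhead


if __name__ == "__main__":
    print(overlay_mtu())
```

The outer Ethernet header is not counted because the underlay MTU already excludes it.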

The last step is to configure veth2: send it to our container network namespace and configure it with a MAC address (02:42:c0:a8:00:02) and an IP address (192.168.0.2):

The symbolic link in /var/run/netns is required so we can use the native ip netns commands (to move the interface to the container network namespace). We used the same addressing scheme as Docker: the last 4 bytes of the MAC address match the IP address of the container and the second one is the VXLAN id.
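The relationship between the IP address and the last four bytes of the MAC address can be checked with a few lines of Python. This is a small sketch; the function name `mac_for_ip` is hypothetical, and the 02:42 prefix is simply taken from the addresses used in this post.

```python
import ipaddress


def mac_for_ip(ip, prefix=(0x02, 0x42)):
    """Build a MAC address whose last four bytes are the IPv4 address."""
    octets = ipaddress.IPv4Address(ip).packed
    return ":".join("%02x" % b for b in bytes(prefix) + octets)


if __name__ == "__main__":
    print(mac_for_ip("192.168.0.2"))
```

For 192.168.0.2 this gives 02:42:c0:a8:00:02, because 192, 168, 0 and 2 are c0, a8, 00 and 02 in hexadecimal.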

We have to do the same on docker1 with different MAC and IP addresses (02:42:c0:a8:00:03 and 192.168.0.3). If you use the terraform stack from the github repository, there is a helper shell script to attach the container to the overlay. We can use it on docker1:

The first parameter is the name of the container to attach and the second one the final digit of the MAC/IP addresses.

Here is the setup we have gotten to:

Now that our containers are configured, we can test connectivity:

We are not able to ping yet. Let's try to understand why by looking at the ARP entries in the container and in the overlay namespace:

Neither command returns any result: neither the container nor the overlay namespace knows the MAC address associated with IP 192.168.0.3. We can verify that our command is generating an ARP query by running tcpdump in the overlay namespace:

If we rerun the ping command from another terminal, here is the tcpdump output we get:

The ARP query is broadcast and received by our overlay namespace but does not receive any answer. We have seen in part 2 that the Docker daemon populates the ARP and FDB tables and makes use of the proxy option of the VXLAN interface to answer these queries. We configured our interface with this option so we can do the same by simply populating the ARP and FDB entries in the overlay namespace:

The first command creates the ARP entry for 192.168.0.3 and the second one configures the forwarding table by telling it the MAC address is accessible using the VXLAN interface, with VXLAN id 42 and on host 10.0.0.11.
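The two entries described above can be sketched as follows. The interface name `vxlan1` is an assumption, and the function only builds the `ip neighbor` and `bridge fdb` command lines without running them.

```python
def overlay_entries(ip, mac, vtep, vxlan="vxlan1", vni=42, dstport=4789,
                    netns="overns"):
    """Return the ARP and FDB commands for one remote container.

    ip/mac identify the remote container; vtep is the address of the host
    it runs on.
    """
    inside = ["ip", "netns", "exec", netns]
    return [
        # L3: which MAC address answers for this IP address.
        inside + ["ip", "neighbor", "add", ip, "lladdr", mac, "dev", vxlan],
        # L2: behind which host that MAC address lives.
        inside + ["bridge", "fdb", "add", mac, "dev", vxlan, "self",
                  "dst", vtep, "vni", str(vni), "port", str(dstport)],
    ]


if __name__ == "__main__":
    for cmd in overlay_entries("192.168.0.3", "02:42:c0:a8:00:03", "10.0.0.11"):
        print(" ".join(cmd))
```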

Do we have connectivity?

Not yet, which makes sense because we have not configured docker1: the ICMP request is received by the container on docker1 but it does not know how to answer. We can verify this on docker1:

The first command shows, as expected, that we do not have any ARP information on 192.168.0.3. The output of the second command is more surprising because we can see the entry in the forwarding database for our container on docker0. What happened is the following: when the ICMP request reached the interface, the entry was 'learned' and added to the database. This behavior is made possible by the 'learning' option of the VXLAN interface. Let's add the ARP information on docker1 and verify that we can now ping:
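The effect of the learning option can be pictured with a toy model. This is only an illustration of the idea, not how the kernel implements it, and the host address 10.0.0.10 for docker0 is an assumption.

```python
class LearningFdb:
    """Toy forwarding database that learns from the traffic it receives."""

    def __init__(self):
        self.entries = {}

    def receive(self, src_mac, from_host):
        # Learning: remember which host the source MAC address was seen behind.
        self.entries[src_mac] = from_host

    def lookup(self, dst_mac):
        # Returns None when the location of the MAC address is unknown.
        return self.entries.get(dst_mac)


if __name__ == "__main__":
    fdb = LearningFdb()
    print(fdb.lookup("02:42:c0:a8:00:02"))      # unknown before any traffic
    fdb.receive("02:42:c0:a8:00:02", "10.0.0.10")
    print(fdb.lookup("02:42:c0:a8:00:02"))      # learned from the ICMP request
```

Learning covers only the L2 side, which is why the ARP entry still has to be added by hand on docker1.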

We have successfully built an overlay with standard Linux commands:

Dynamic container discovery

We have just created an overlay from scratch. However, we need to manually create ARP and FDB entries for containers to talk to each other. We will now look at how this discovery process can be automated.

Let us first clean up our setup to start from scratch:

Catching network events: NETLINK

Netlink is used to transfer information between the kernel and user-space processes: https://en.wikipedia.org/wiki/Netlink. iproute2, which we used earlier to configure interfaces, relies on Netlink to get/send configuration information to the kernel. It consists of multiple protocols ('families') to communicate with different kernel components. The most common protocol is NETLINK_ROUTE which is the interface for routing and link configuration.

For each protocol, Netlink messages are organized by groups, for example for NETLINK_ROUTE you have:

- RTMGRP_LINK: link related messages

- RTMGRP_NEIGH: neighbor related messages

- many others

For each group, you then have multiple notifications, for example:

- RTMGRP_LINK:

  - RTM_NEWLINK: A link was created

  - RTM_DELLINK: A link was deleted

- RTMGRP_NEIGH:

  - RTM_NEWNEIGH: A neighbor was added

  - RTM_DELNEIGH: A neighbor was deleted

  - RTM_GETNEIGH: The kernel is looking for a neighbor

I described the messages received in userspace when the kernel is sending notifications for these events, but similar messages can be sent to the kernel to configure links or neighbors.
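For reference, the groups and notifications listed above map to small integers. The sketch below hard-codes the values from the Linux headers (linux/rtnetlink.h); the helper names `subscription_mask` and `describe` are hypothetical.

```python
# Multicast groups (bit flags) and message types from linux/rtnetlink.h.
RTMGRP_LINK = 0x1
RTMGRP_NEIGH = 0x4

RTM_NEWLINK, RTM_DELLINK = 16, 17
RTM_NEWNEIGH, RTM_DELNEIGH, RTM_GETNEIGH = 28, 29, 30

GROUP_NAMES = {RTMGRP_LINK: "RTMGRP_LINK", RTMGRP_NEIGH: "RTMGRP_NEIGH"}
NOTIFICATIONS = {
    RTMGRP_LINK: {RTM_NEWLINK: "RTM_NEWLINK", RTM_DELLINK: "RTM_DELLINK"},
    RTMGRP_NEIGH: {RTM_NEWNEIGH: "RTM_NEWNEIGH", RTM_DELNEIGH: "RTM_DELNEIGH",
                   RTM_GETNEIGH: "RTM_GETNEIGH"},
}


def subscription_mask(*groups):
    """Combine several groups into the bitmask used when binding a socket."""
    mask = 0
    for group in groups:
        mask |= group
    return mask


def describe(msg_type):
    """Return (group name, notification name) for a message type, or None."""
    for group, names in NOTIFICATIONS.items():
        if msg_type in names:
            return GROUP_NAMES[group], names[msg_type]
    return None


if __name__ == "__main__":
    print(subscription_mask(RTMGRP_LINK, RTMGRP_NEIGH))
    print(describe(RTM_GETNEIGH))
```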

iproute2 allows us to listen to Netlink events using the monitor subcommand. If we want to monitor for link information for instance:

In another terminal on docker0, we can create a link and then delete it:

On the first terminal we can see some output.

When we created the interfaces:

When we removed them:

We can use this command to monitor other events:

In another terminal:

We get the following output:

In our case we are interested in neighbor events, in particular for RTM_GETNEIGH which are generated when the kernel does not have neighbor information and sends this notification to userspace so an application can create it. By default, this event is not sent to userspace but we can enable it and monitor neighbor notifications:

This setting will not be necessary afterwards because the l2miss and l3miss options of our vxlan interface will generate the RTM_GETNEIGH events.

In a second terminal, we can now trigger the generation of the GETNEIGH event:

Here is the output we get:

We can use the same command in containers attached to our overlay. Let's create an overlay and attach a container to it.

The two shell scripts are available on the github repo.

create-overlay creates an overlay called overns using the commands presented earlier:

attach-ctn attaches a container to the overlay. The first parameter is the name of the container and the second one the last byte of its IP address:

We can now run ip monitor in the container:

In a second terminal, we can ping an unknown host to generate GETNEIGH events:

In the first terminal we can see the neighbor events:

We can also look in the network namespace of the overlay:

This event is slightly different because it is generated by the vxlan interface (because we created the interface with the l2miss and l3miss options). Let's add the neighbor entry to the overlay namespace:

If we run the ip monitor neigh command and try to ping from the other terminal, here is what we get:

Now that we have the ARP information, we are getting an L2miss because we do not know where the mac address is located in the overlay. Let's add this information:

If we run the ip monitor neigh command again and try to ping we will not see neighbor events anymore.

The ip monitor command is very useful to see what is happening but in our case we want to catch these events to populate L2 and L3 information so we need to interact with them programmatically.

Here is a simple Python script to subscribe to Netlink messages and decode GETNEIGH events:

This script only contains the interesting lines; the full one is available on the GitHub repository. Let's go quickly through the most important parts of the script. First, we create the Netlink socket, configure it for the NETLINK_ROUTE protocol and subscribe to the neighbor event group (RTMGRP_NEIGH):
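The socket setup can be sketched like this. It is a minimal sketch rather than the script from the repository: it is Linux-only, the function name `open_neigh_socket` is hypothetical, and a port id of 0 is passed so that the kernel assigns one.

```python
import socket

RTMGRP_NEIGH = 0x4   # neighbor event group, from linux/rtnetlink.h


def open_neigh_socket():
    """Open a NETLINK_ROUTE socket subscribed to neighbor notifications."""
    sock = socket.socket(socket.AF_NETLINK, socket.SOCK_RAW, socket.NETLINK_ROUTE)
    # A Netlink address is (port id, multicast group mask).
    sock.bind((0, RTMGRP_NEIGH))
    return sock


if __name__ == "__main__":
    with open_neigh_socket() as sock:
        data = sock.recv(65535)   # blocks until the kernel sends a notification
        print("received %d bytes" % len(data))
```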

Then we decode the message and filter to only process GETNEIGH messages:

To understand how the message is decoded, here is a representation of the message. The Netlink header is represented in orange:

Once we have a GETNEIGH message we can decode the ndmsg header (in blue):

This header is followed by an rtattr structure, which contains the data we are interested in. First we decode the header of the structure (purple):

We can receive two different types of messages:

- NDA_DST: L3 miss, the kernel is looking for the mac address associated with the IP in the data field (4 data bytes after the rta header)

- NDA_LLADDR: L2 miss, the kernel is looking for the vxlan host for the MAC address in the data field (6 data bytes after the rta header)
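The decoding steps above can be sketched with the struct module. This is a sketch under simplifying assumptions: it reads only the first attribute after the ndmsg header, and it is exercised with a hand-built message rather than one captured from a kernel. The helper `build_getneigh` exists only for that demonstration.

```python
import socket
import struct

RTM_GETNEIGH = 30    # from linux/rtnetlink.h
NDA_DST = 1          # from linux/neighbour.h
NDA_LLADDR = 2

NLMSGHDR = struct.Struct("=LHHLL")   # len, type, flags, seq, pid (16 bytes)
NDMSG = struct.Struct("=BBHiHBB")    # family, pad1, pad2, ifindex, state, flags, type (12 bytes)
RTATTR = struct.Struct("=HH")        # rta_len (header + data), rta_type


def decode_getneigh(msg):
    """Return (kind, address) for a GETNEIGH message, None for anything else."""
    _len, msg_type, _flags, _seq, _pid = NLMSGHDR.unpack_from(msg, 0)
    if msg_type != RTM_GETNEIGH:
        return None
    offset = NLMSGHDR.size + NDMSG.size          # skip both headers
    rta_len, rta_type = RTATTR.unpack_from(msg, offset)
    data = msg[offset + RTATTR.size:offset + rta_len]
    if rta_type == NDA_DST:                      # 4 bytes: IPv4 address
        return "L3 miss", socket.inet_ntoa(data)
    if rta_type == NDA_LLADDR:                   # 6 bytes: MAC address
        return "L2 miss", ":".join("%02x" % b for b in data)
    return None


def build_getneigh(rta_type, data, ifindex=2):
    """Build a synthetic GETNEIGH message (demonstration only)."""
    rta = RTATTR.pack(RTATTR.size + len(data), rta_type) + data
    body = NDMSG.pack(socket.AF_INET, 0, 0, ifindex, 0, 0, 0) + rta
    return NLMSGHDR.pack(NLMSGHDR.size + len(body), RTM_GETNEIGH, 0, 0, 0) + body


if __name__ == "__main__":
    print(decode_getneigh(build_getneigh(NDA_DST, socket.inet_aton("192.168.0.3"))))
    print(decode_getneigh(build_getneigh(NDA_LLADDR, bytes.fromhex("0242c0a80003"))))
```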

We can try this script in our overlay (we recreate everything to start with a clean environment):

If we try to ping from another terminal:

Here is the output we get:

If we add the neighbor information and ping again:

We now get an L2 miss because we have added the L3 information.

Dynamic discovery with Consul

Now that we have seen how we can be notified of L2 and L3 misses and catch these events in python, we will store all L2 and L3 data in Consul and add the entries in the overlay namespace when we get a neighbor event.

First, we are going to create the entries in Consul. We can do this using the web interface or curl:

We create two types of entries:

- ARP: using the keys demo/arp/{IP address} with the MAC address as the value

- FIB: using the keys demo/fib/{MAC address} with the IP address of the server in the overlay hosting this MAC address
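The entries can be generated from a list of containers, as in the sketch below. It only builds the requests for Consul's key/value HTTP endpoint (PUT /v1/kv/KEY) and does not send them. The base URL, the host address 10.0.0.10 for docker0 and the function name are assumptions, and the two key prefixes are parameters that should be adjusted to match the keys you actually use.

```python
def consul_requests(containers, base_url="http://consul:8500",
                    arp_prefix="demo/arp", fib_prefix="demo/fib"):
    """Return (method, url, value) tuples for the ARP and FIB entries.

    containers is a list of (container IP, container MAC, host IP) tuples.
    """
    requests = []
    for ip, mac, host in containers:
        # ARP entry: IP address -> MAC address.
        requests.append(("PUT", "%s/v1/kv/%s/%s" % (base_url, arp_prefix, ip), mac))
        # FIB entry: MAC address -> host running the container.
        requests.append(("PUT", "%s/v1/kv/%s/%s" % (base_url, fib_prefix, mac), host))
    return requests


if __name__ == "__main__":
    demo = [
        ("192.168.0.2", "02:42:c0:a8:00:02", "10.0.0.10"),   # docker0 (assumed address)
        ("192.168.0.3", "02:42:c0:a8:00:03", "10.0.0.11"),   # docker1
    ]
    for method, url, value in consul_requests(demo):
        print(method, url, value)
```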

In the web interface, we get this for ARP keys:

Now we just need to look up data when we receive a GETNEIGH event and populate the ARP or FIB tables using Consul data. Here is a (slightly simplified) Python script which does this:

The full version of this script is also available on the GitHub repository mentioned earlier. Here is a quick explanation of what it does:

Instead of processing Netlink messages manually, we use the pyroute2 library. This library will parse Netlink messages and allow us to send Netlink messages to configure ARP/FIB entries. In addition, we bind the Netlink socket in the overlay namespace. We could use the ip netns command to start the script in the namespace, but we also need to access Consul from the script to get configuration data. To achieve this, we will run the script in the host network namespace and bind the Netlink socket in the overlay namespace:

We will now wait for GETNEIGH events:

We retrieve the index of the interface and its name (for logging purposes):

Now, if the message is an L3 miss, we get the IP address from the Netlink message payload and try to look up the associated ARP entry from Consul. If we find it, we add the neighbor entry to the overlay namespace by sending a Netlink message to the kernel with the relevant information.

If the message is an L2 miss, we do the same with the FIB data.
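The decision logic of the last two steps can be sketched independently of pyroute2 and Consul. In this sketch a plain dictionary stands in for the Consul key/value store and the returned tuples stand in for the Netlink requests sent to the kernel; the function name and the key prefixes are assumptions.

```python
def resolve_miss(kind, address, store,
                 arp_prefix="demo/arp", fib_prefix="demo/fib"):
    """Decide which entry to add for a miss, or None if the store has no data.

    kind is "L3 miss" (address is an IP) or "L2 miss" (address is a MAC).
    """
    if kind == "L3 miss":
        mac = store.get("%s/%s" % (arp_prefix, address))
        return ("neigh add", address, mac) if mac else None
    if kind == "L2 miss":
        host = store.get("%s/%s" % (fib_prefix, address))
        return ("fdb add", address, host) if host else None
    return None


if __name__ == "__main__":
    store = {
        "demo/arp/192.168.0.3": "02:42:c0:a8:00:03",
        "demo/fib/02:42:c0:a8:00:03": "10.0.0.11",
    }
    print(resolve_miss("L3 miss", "192.168.0.3", store))
    print(resolve_miss("L2 miss", "02:42:c0:a8:00:03", store))
```

Returning None when the store has no matching key leaves the miss unanswered, so the ping simply fails as it did before any entries were created.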

Let's now try this script. First, we will clean up everything and recreate the overlay namespace and containers:

If we try to ping the container on docker1 from docker0, it will not work because we have no ARP/FIB data yet:

We will now start our script on both hosts:

And try pinging again (from another terminal on docker0):

Here is the output we get from the Python script on docker0:

First, we get an L3 miss (no ARP data for 192.168.0.3): we query Consul to find the MAC address and populate the neighbor table. Then we receive an L2 miss (no FIB information for 02:42:c0:a8:00:03): we look up this MAC address in Consul and populate the forwarding database.

On docker1, we see a similar output but we only get the L3 miss because the L2 forwarding data is learned by the overlay namespace when the ICMP request packet gets to the overlay.

Here is an overview of what we built:

Conclusion

This concludes our three-part blog post on the Docker overlay. Do not hesitate to ping me (on Twitter for instance) if you see any mistakes or inaccuracies, or if some parts of the posts are not clear. I will do my best to amend these posts quickly.